Quickly Deploying Ceph with ceph-deploy¶

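Introduction to Ceph¶

Ceph is a unified storage system that supports three interfaces:

Object: a native API, plus compatibility with the Swift and S3 APIs

Block: supports thin provisioning, snapshots, and clones

File: a POSIX interface with snapshot support

Ceph is also a distributed storage system with the following characteristics:

High scalability: runs on commodity x86 servers, supports 10 to 1000 servers, and scales from TB to PB.

High reliability: no single point of failure, multiple data replicas, automatic management, and automatic repair.

High performance: data is distributed evenly and access is highly parallel. Object storage and block storage need no metadata server.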

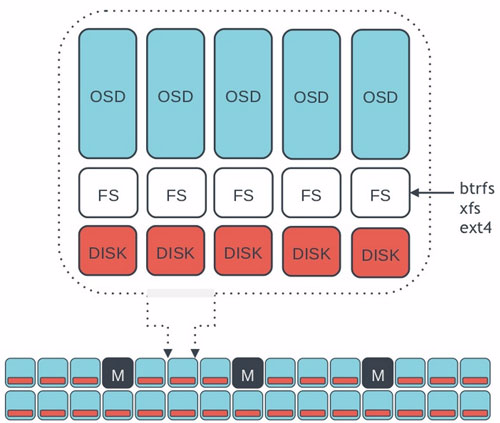

Ceph Architecture¶

Environment Preparation¶

Lab cluster hosts (CentOS 7.3)¶

| Host | IP | Role |
|---|---|---|
| node-1 | 10.188.169.121 | mon, osd-0 |
| node-2 | 10.188.169.120 | osd-1 |
| node-3 | 10.188.169.122 | osd-2 |

State after deployment:

[root@node-1 ceph-deploy]# ceph -s

cluster 4b5f675e-f8b0-4790-abf6-82804c6afbd1

health HEALTH_OK

monmap e1: 1 mons at {node-1=10.188.169.121:6789/0}

election epoch 3, quorum 0 node-1

osdmap e15: 3 osds: 3 up, 3 in

flags sortbitwise,require_jewel_osds

pgmap v28: 64 pgs, 1 pools, 0 bytes data, 0 objects

100 MB used, 284 GB / 284 GB avail

64 active+clean

[root@node-1 ceph-deploy]# ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 0.27809 root default

-2 0.09270 host node-1

0 0.09270 osd.0 up 1.00000 1.00000

-3 0.09270 host node-2

1 0.09270 osd.1 up 1.00000 1.00000

-4 0.09270 host node-3

2 0.09270 osd.2 up 1.00000 1.00000

On all nodes:¶

Update the hosts file

echo "10.188.169.121 node-1" >> /etc/hosts

echo "10.188.169.120 node-2" >> /etc/hosts

echo "10.188.169.122 node-3" >> /etc/hosts
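Appending with echo adds a duplicate line every time the step is rerun. The following is a minimal sketch of an idempotent variant; the helper name add_host_entry is hypothetical and not part of any Ceph tooling, and it assumes each host name appears as a whole whitespace-separated field in the file.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already mapped.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole field after the address on non-comment lines.
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every node):
#   add_host_entry 10.188.169.121 node-1
#   add_host_entry 10.188.169.120 node-2
#   add_host_entry 10.188.169.122 node-3
```

Passing a third argument lets the helper be tried against a scratch file before touching /etc/hosts.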

Configure SSH trust

ssh-copy-id node-1

ssh-copy-id node-2

ssh-copy-id node-3
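The same command is repeated once per node here and in later steps, so a small loop helper can cut down on typing. This is only a sketch: NODES and for_each_node are hypothetical names, and it assumes an SSH key pair already exists on the machine running it (for example one created with ssh-keygen).

```shell
# Space-separated list of cluster nodes, taken from the table above.
NODES="node-1 node-2 node-3"

# Run a command once per node, passing the node name as the last argument.
# Stops at the first failure and returns non-zero.
for_each_node() {
    local node
    for node in $NODES; do
        "$@" "$node" || return 1
    done
}

# Example:
#   for_each_node ssh-copy-id
```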

Configure YUM

Install and enable EPEL

sudo yum install -y yum-utils && sudo yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/ && sudo yum install --nogpgcheck -y epel-release && sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 && sudo rm /etc/yum.repos.d/dl.fedoraproject.org*

Create /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-jewel/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc
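Because this file has to exist on every node, it can be convenient to write it from a script instead of an editor. A minimal sketch follows, assuming the jewel repository settings shown above; the function name write_ceph_repo is hypothetical.

```shell
# Write the ceph-noarch repository definition to the given path
# (defaults to /etc/yum.repos.d/ceph.repo, which requires root).
write_ceph_repo() {
    local target="${1:-/etc/yum.repos.d/ceph.repo}"
    cat > "$target" <<'EOF'
[ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-jewel/el7/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}

# Example (as root):
#   write_ceph_repo
```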

Update the package repositories and install ceph-deploy

sudo yum update && sudo yum install ceph-deploy

Synchronize time across all nodes

yum -y install ceph ceph-radosgw ntp ntpdate

ntpdate -s cn.pool.ntp.org

echo "ntpdate -s cn.pool.ntp.org" >> /etc/rc.d/rc.local

echo "01 01 * * * root /usr/sbin/ntpdate -s cn.pool.ntp.org >> /dev/null 2>&1" >> /etc/crontab

Flush the firewall rules and disable SELinux

iptables -F

iptables -X

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Reboot the machine for the SELinux change to take effect

systemctl stop firewalld

systemctl disable firewalld

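Deploying the Ceph Cluster¶

Environment cleanup¶

If a previous deployment failed, there is no need to uninstall the Ceph packages or rebuild the virtual machines. Running the following commands on every node returns the environment to the state it was in right after the Ceph packages were installed. Cleaning up before deploying on top of an old cluster is strongly recommended; otherwise all kinds of anomalies can occur: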

ps aux|grep ceph |awk '{print $2}'|xargs kill -9

ps -ef|grep ceph

# Make sure all ceph processes have stopped at this point. If any are still running, repeat the kill command.

umount /var/lib/ceph/osd/*

rm -rf /var/lib/ceph/osd/*

rm -rf /var/lib/ceph/mon/*

rm -rf /var/lib/ceph/mds/*

rm -rf /var/lib/ceph/bootstrap-mds/*

rm -rf /var/lib/ceph/bootstrap-osd/*

rm -rf /var/lib/ceph/bootstrap-mon/*

rm -rf /var/lib/ceph/tmp/*

rm -rf /etc/ceph/*

rm -rf /var/run/ceph/*
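Since these commands are destructive, it can help to preview them before running them. The sketch below covers only the rm -rf steps listed above (not the kill or umount steps); CEPH_STATE_DIRS, ceph_cleanup, and the APPLY switch are hypothetical names introduced for illustration.

```shell
# Directories whose contents the cleanup above removes.
CEPH_STATE_DIRS="/var/lib/ceph/osd /var/lib/ceph/mon /var/lib/ceph/mds
/var/lib/ceph/bootstrap-mds /var/lib/ceph/bootstrap-osd
/var/lib/ceph/bootstrap-mon /var/lib/ceph/tmp /etc/ceph /var/run/ceph"

# Print the cleanup commands; delete for real only when APPLY=1 is set.
ceph_cleanup() {
    local dir
    for dir in $CEPH_STATE_DIRS; do
        if [ "${APPLY:-0}" = "1" ]; then
            # ${dir:?} aborts instead of expanding to "/*" if dir is empty.
            rm -rf "${dir:?}"/*
        else
            printf 'rm -rf %s/*\n' "$dir"
        fi
    done
}

# Example:
#   ceph_cleanup            # dry run, prints what would be removed
#   APPLY=1 ceph_cleanup    # actually removes the files (run as root)
```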

The following command also removes the Ceph packages:

ceph-deploy purge node-1 node-2 node-3

Create the Ceph cluster on the deploy node (node-1)¶

Create the deployment directory

mkdir ceph-deploy

cd ceph-deploy

ceph-deploy new node-1

[root@node-1 ceph-deploy]# ceph-deploy new node-1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.38): /bin/ceph-deploy new node-1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x1567578>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x15cba28>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['node-1']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[node-1][DEBUG ] connected to host: node-1

[node-1][DEBUG ] detect platform information from remote host

[node-1][DEBUG ] detect machine type

[node-1][DEBUG ] find the location of an executable

[node-1][INFO ] Running command: /usr/sbin/ip link show

[node-1][INFO ] Running command: /usr/sbin/ip addr show

[node-1][DEBUG ] IP addresses found: [u'10.188.169.121']

[ceph_deploy.new][DEBUG ] Resolving host node-1

[ceph_deploy.new][DEBUG ] Monitor node-1 at 10.188.169.121

[ceph_deploy.new][DEBUG ] Monitor initial members are ['node-1']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['10.188.169.121']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...


Directory contents:

[root@node-1 ceph-deploy]# ll

-rw-r--r-- 1 root root 284 Jul 28 03:01 ceph.conf

-rw-r--r-- 1 root root 249470 Jul 28 03:24 ceph-deploy-ceph.log

-rw------- 1 root root 73 Jul 28 03:00 ceph.mon.keyring

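Based on your own IP configuration, add public_network to ceph.conf, slightly increase the clock drift allowed between mons (the default is 0.05 s; it is set to 2 s here), and change the default replica count: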

echo public_network = 10.188.169.0/24 >>ceph.conf

echo mon_clock_drift_allowed = 2 >> ceph.conf

echo osd_pool_default_size = 2 >> ceph.conf

[root@node-1 ceph-deploy]# cat ceph.conf

[global]

fsid = 4b5f675e-f8b0-4790-abf6-82804c6afbd1

mon_initial_members = node-1

mon_host = 10.188.169.121

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd_pool_default_size = 2

public_network = 10.188.169.0/24

mon_clock_drift_allowed = 2
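The echo ... >> ceph.conf lines above append unconditionally, so running them twice leaves duplicate keys in the file. A minimal sketch of an idempotent alternative follows. It assumes the file contains only a [global] section, as in the listing above, and matches the key text literally; set_conf_option is a hypothetical helper name.

```shell
# Set "key = value" in an INI-style file: rewrite the line if the key is
# already present, otherwise append it at the end of the file.
set_conf_option() {
    local file="$1" key="$2" value="$3" tmp
    tmp=$(mktemp) || return 1
    awk -v k="$key" -v v="$value" '
        {
            # A line sets k if, ignoring leading blanks, it is k then "=".
            line = $0
            sub(/^[ \t]+/, "", line)
            rest = substr(line, length(k) + 1)
            if (index(line, k) == 1 && rest ~ /^[ \t]*=/) {
                print k " = " v
                done = 1
            } else {
                print
            }
        }
        END { if (!done) print k " = " v }' "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example (in the ceph-deploy directory):
#   set_conf_option ceph.conf public_network 10.188.169.0/24
#   set_conf_option ceph.conf mon_clock_drift_allowed 2
#   set_conf_option ceph.conf osd_pool_default_size 2
```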

Install Ceph

ceph-deploy install node-1 node-2 node-3

Note

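If you have run ceph-deploy purge, you must run this step again to reinstall Ceph.

Note

The installation sometimes fails with [ceph_deploy][ERROR ] RuntimeError: NoSectionError: No section: ‘ceph’. On the failing node, run yum -y remove ceph-release to remove the ceph-release package, then run the install again.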

Deploy the mon node

ceph-deploy mon create-initial

[root@node-1 ceph-deploy]# ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.38): /bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x29cc440>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x29c1668>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts node-1

[ceph_deploy.mon][DEBUG ] detecting platform for host node-1 ...

[node-1][DEBUG ] connected to host: node-1

[node-1][DEBUG ] detect platform information from remote host

[node-1][DEBUG ] detect machine type

[node-1][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.3.1611 Core

[node-1][DEBUG ] determining if provided host has same hostname in remote

[node-1][DEBUG ] get remote short hostname

[node-1][DEBUG ] deploying mon to node-1

[node-1][DEBUG ] get remote short hostname

[node-1][DEBUG ] remote hostname: node-1

[node-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node-1][DEBUG ] create the mon path if it does not exist

[node-1][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node-1/done

[node-1][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-node-1/done

[node-1][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-node-1.mon.keyring

[node-1][DEBUG ] create the monitor keyring file

[node-1][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i node-1 --keyring /var/lib/ceph/tmp/ceph-node-1.mon.keyring --setuser 167 --setgroup 167

[node-1][DEBUG ] ceph-mon: renaming mon.noname-a 10.188.169.121:6789/0 to mon.node-1

[node-1][DEBUG ] ceph-mon: set fsid to 4b5f675e-f8b0-4790-abf6-82804c6afbd1

[node-1][DEBUG ] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node-1 for mon.node-1

[node-1][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-node-1.mon.keyring

[node-1][DEBUG ] create a done file to avoid re-doing the mon deployment

[node-1][DEBUG ] create the init path if it does not exist

[node-1][INFO ] Running command: systemctl enable ceph.target

[node-1][INFO ] Running command: systemctl enable ceph-mon@node-1

[node-1][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node-1.service to /usr/lib/systemd/system/ceph-mon@.service.

[node-1][INFO ] Running command: systemctl start ceph-mon@node-1

[node-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node-1.asok mon_status

[node-1][DEBUG ] ********************************************************************************

[node-1][DEBUG ] status for monitor: mon.node-1

[node-1][DEBUG ] {

[node-1][DEBUG ] "election_epoch": 3,

[node-1][DEBUG ] "extra_probe_peers": [],

[node-1][DEBUG ] "monmap": {

[node-1][DEBUG ] "created": "2017-07-28 03:17:39.758385",

[node-1][DEBUG ] "epoch": 1,

[node-1][DEBUG ] "fsid": "4b5f675e-f8b0-4790-abf6-82804c6afbd1",

[node-1][DEBUG ] "modified": "2017-07-28 03:17:39.758385",

[node-1][DEBUG ] "mons": [

[node-1][DEBUG ] {

[node-1][DEBUG ] "addr": "10.188.169.121:6789/0",

[node-1][DEBUG ] "name": "node-1",

[node-1][DEBUG ] "rank": 0

[node-1][DEBUG ] }

[node-1][DEBUG ] ]

[node-1][DEBUG ] },

[node-1][DEBUG ] "name": "node-1",

[node-1][DEBUG ] "outside_quorum": [],

[node-1][DEBUG ] "quorum": [

[node-1][DEBUG ] 0

[node-1][DEBUG ] ],

[node-1][DEBUG ] "rank": 0,

[node-1][DEBUG ] "state": "leader",

[node-1][DEBUG ] "sync_provider": []

[node-1][DEBUG ] }

[node-1][DEBUG ] ********************************************************************************

[node-1][INFO ] monitor: mon.node-1 is running

[node-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node-1.asok mon_status

[ceph_deploy.mon][INFO ] processing monitor mon.node-1

[node-1][DEBUG ] connected to host: node-1

[node-1][DEBUG ] detect platform information from remote host

[node-1][DEBUG ] detect machine type

[node-1][DEBUG ] find the location of an executable

[node-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node-1.asok mon_status

[ceph_deploy.mon][INFO ] mon.node-1 monitor has reached quorum!

[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO ] Running gatherkeys...

[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmp1NWwV3

[node-1][DEBUG ] connected to host: node-1

[node-1][DEBUG ] detect platform information from remote host

[node-1][DEBUG ] detect machine type

[node-1][DEBUG ] get remote short hostname

[node-1][DEBUG ] fetch remote file

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.node-1.asok mon_status

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get client.admin

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get client.bootstrap-mds

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get client.bootstrap-mgr

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get-or-create client.bootstrap-mgr mon allow profile bootstrap-mgr

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get client.bootstrap-osd

[node-1][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node-1/keyring auth get client.bootstrap-rgw

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmp1NWwV3

Check the cluster status

[root@node-1 ceph-deploy]# ceph -s

cluster 4b5f675e-f8b0-4790-abf6-82804c6afbd1

health HEALTH_ERR

no osds

monmap e1: 1 mons at {node-1=10.188.169.121:6789/0}

election epoch 3, quorum 0 node-1

osdmap e1: 0 osds: 0 up, 0 in

flags sortbitwise,require_jewel_osds

pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

64 creating

Adding OSDs¶


List the available disks:

ceph-deploy disk list node-1 node-2 node-3

Wipe the partition tables so the disks can be used by Ceph:

ceph-deploy disk zap node-1:vdb node-2:vdb node-2:vdc node-3:vdb

Add the OSDs to the cluster:

ceph-deploy --overwrite-conf osd prepare node-1:/dev/vdb node-2:/dev/vdb node-3:/dev/vdb
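When every node contributes the same device, the HOST:DISK argument list can be generated instead of typed by hand. This is a small sketch under that assumption; osd_targets is a hypothetical helper, not a ceph-deploy feature.

```shell
# Print "HOST:DISK HOST:DISK ..." for one disk path and a list of hosts.
osd_targets() {
    local disk="$1" host out=""
    shift
    for host in "$@"; do
        # Add a separating space only between entries.
        out="${out:+$out }${host}:${disk}"
    done
    printf '%s\n' "$out"
}

# Example:
#   ceph-deploy --overwrite-conf osd prepare $(osd_targets /dev/vdb node-1 node-2 node-3)
```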

Check the cluster status:

[root@node-1 ceph-deploy]# ceph -s

cluster 4b5f675e-f8b0-4790-abf6-82804c6afbd1

health HEALTH_OK

monmap e1: 1 mons at {node-1=10.188.169.121:6789/0}

election epoch 3, quorum 0 node-1

osdmap e15: 3 osds: 3 up, 3 in

flags sortbitwise,require_jewel_osds

pgmap v28: 64 pgs, 1 pools, 0 bytes data, 0 objects

100 MB used, 284 GB / 284 GB avail

64 active+clean
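OSDs can take a short while to come up, so the first ceph -s after adding them may not yet show HEALTH_OK. The sketch below polls until it does. It assumes the pre-Luminous ceph -s text layout shown above, where a line starts with the word health; ceph_health_of, wait_for_health_ok, and the STATUS_CMD override are hypothetical names.

```shell
# Read `ceph -s` text on stdin and print the health token, e.g. HEALTH_OK.
ceph_health_of() {
    awk '$1 == "health" { print $2; exit }'
}

# Poll until the cluster reports HEALTH_OK or the attempts run out.
# Usage: wait_for_health_ok [TRIES] [DELAY_SECONDS]
# STATUS_CMD replaces `ceph -s`, so the loop can be tried without a cluster.
wait_for_health_ok() {
    local tries="${1:-30}" delay="${2:-2}" i=0 health
    while [ "$i" -lt "$tries" ]; do
        health=$(${STATUS_CMD:-ceph -s} 2>/dev/null | ceph_health_of)
        if [ "$health" = "HEALTH_OK" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example:
#   wait_for_health_ok 60 5 && echo "cluster is healthy"
```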

Miscellaneous¶

If /etc/ceph/ceph.conf has been modified, push the conf file to the other nodes:

ceph-deploy --overwrite-conf config push node-2 node-3

The monitor service must then be restarted on each monitor node; node-1 here stands for the hostname of the node running the monitor:

systemctl restart ceph-mon@node-1.service

To start, stop, or restart an OSD, use its id (0 here), which can be looked up with ceph osd tree:

systemctl start/stop/restart ceph-osd@0.service
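Looking up the OSD ids for a host can be scripted by filtering the ceph osd tree text. The sketch below assumes the jewel column layout shown earlier (ID, WEIGHT, TYPE, NAME, ...); later releases add columns, so the field positions would need adjusting. osd_ids_on_host is a hypothetical helper name.

```shell
# Read `ceph osd tree` text on stdin and print the OSD ids under HOST.
osd_ids_on_host() {
    awk -v h="$1" '
        $3 == "host"                  { current = $4; next }  # remember the host row
        $3 ~ /^osd\./ && current == h { print $1 }            # OSD rows below it
    '
}

# Example (on the node that owns the OSDs):
#   for id in $(ceph osd tree | osd_ids_on_host node-2); do
#       systemctl restart "ceph-osd@${id}.service"
#   done
```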

Configuring Ceph as a Block Storage Device¶

Configure the client¶

Confirm that the client kernel supports RBD

sudo modprobe rbd

echo $?

Configure SSH trust

Log in to the MON node and copy its public key to the client:

echo "10.188.169.65 mitaka" >>/etc/hosts

ssh-copy-id root@mitaka

From the MON node, install Ceph on the client [mitaka]

cd /etc/ceph

ceph-deploy --username root install mitaka

Push the Ceph config to the client

ceph-deploy config push mitaka

Create a user and grant it access to the rbd pool

ceph df

ceph auth list

ceph auth get-or-create client.rbd mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=rbd'

ceph auth list

Install the user's keyring on the client

ceph auth get-or-create client.rbd | ssh root@10.188.169.65 sudo tee /etc/ceph/ceph.client.rbd.keyring

Verify that the user was added successfully

ssh root@10.188.169.65

cat /etc/ceph/ceph.client.rbd.keyring >> /etc/ceph/keyring

ceph -s --name client.rbd

[root@mitaka ceph]# ceph -s --name client.rbd

cluster 772043b4-4110-472f-8015-dda34e85d1de

health HEALTH_OK

monmap e1: 3 mons at {mon-1=10.188.169.62:6789/0,osd-1=10.188.169.63:6789/0,osd-2=10.188.169.64:6789/0}

election epoch 6, quorum 0,1,2 mon-1,osd-1,osd-2

osdmap e14: 3 osds: 3 up, 3 in

flags sortbitwise

pgmap v611: 64 pgs, 1 pools, 0 bytes data, 0 objects

101 MB used, 284 GB / 284 GB avail

64 active+clean

Create a Ceph block device¶

Create a 1 GB RADOS block device in the rbd pool

rbd create rbd1 --size 1G -p rbd --name client.rbd

Check the result

rbd ls --name client.rbd

rbd info rbd1 --name client.rbd

[root@mitaka ceph]# rbd info rbd1 --name client.rbd

rbd image 'rbd1':

size 1024 MB in 256 objects

order 22 (4096 kB objects)

block_name_prefix: rbd_data.104074b0dc51

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

Map the Ceph block device¶

Map the block device to the client

rbd map --image rbd1 --name client.rbd

The mapping fails with an error:

[root@mitaka ceph]# rbd map --image rbd1 --name client.rbd

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable".

In some cases useful info is found in syslog - try "dmesg | tail" or so.

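The cause is the test VM's kernel: uname -r reports 3.10.0-327.28.3.el7.x86_64, and kernel 3.10 currently supports only the layering feature. One fix is to add the line rbd_default_features = 1 to /etc/ceph/ceph.conf, so that images created afterwards have only that one feature. The other is to disable the unsupported features on this RBD image: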

rbd feature disable rbd1 exclusive-lock object-map fast-diff deep-flatten
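The value 1 for rbd_default_features is a bitmask in which each feature has its own bit, and layering is bit value 1. The sketch below sums the bits for a list of feature names, using the bit values from the Ceph RBD documentation; rbd_feature_mask is a hypothetical helper, not an rbd subcommand.

```shell
# Combine the bit values of the named RBD image features into one mask.
rbd_feature_mask() {
    local mask=0 name
    for name in "$@"; do
        case "$name" in
            layering)       mask=$((mask | 1)) ;;
            striping)       mask=$((mask | 2)) ;;
            exclusive-lock) mask=$((mask | 4)) ;;
            object-map)     mask=$((mask | 8)) ;;
            fast-diff)      mask=$((mask | 16)) ;;
            deep-flatten)   mask=$((mask | 32)) ;;
            journaling)     mask=$((mask | 64)) ;;
            *) echo "unknown feature: $name" >&2; return 1 ;;
        esac
    done
    echo "$mask"
}

# Example:
#   rbd_feature_mask layering
#   rbd_feature_mask layering exclusive-lock object-map fast-diff deep-flatten
```

The second example corresponds to the feature list reported by rbd info for rbd1 before the unsupported features were disabled.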

List the mapped block devices

[root@mitaka ceph]# rbd showmapped --name client.rbd

id pool image snap device

0 rbd rbd1 - /dev/rbd0

Format, mount, and use the device

[root@mitaka ceph]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 1073 MB, 1073741824 bytes, 2097152 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

[root@mitaka ceph]# mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0 isize=256 agcount=9, agsize=31744 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=262144, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@mitaka ceph]# mkdir /ceph-rbd1

[root@mitaka ceph]# mount /dev/rbd0 /ceph-rbd1/

[root@mitaka ceph]# df -h /ceph-rbd1/

Filesystem Size Used Avail Use% Mounted on

/dev/rbd0 1014M 33M 982M 4% /ceph-rbd1

[root@mitaka ceph]# dd if=/dev/zero of=/ceph-rbd1/test.file bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 0.135379 s, 1.5 GB/s

[root@mitaka ceph]# ll -sh /ceph-rbd1/test.file

200M -rw-r--r--. 1 root root 200M May 22 03:59 /ceph-rbd1/test.file

#!/bin/bash

function get_nr_processor()

{

grep '^processor' /proc/cpuinfo | wc -l

}

function get_nr_socket()

{

grep 'physical id' /proc/cpuinfo | awk -F: '{

print $2 | "sort -un"}' | wc -l

}

function get_nr_siblings()

{

grep 'siblings' /proc/cpuinfo | awk -F: '{

print $2 | "sort -un"}'

}

function get_nr_cores_of_socket()

{

grep 'cpu cores' /proc/cpuinfo | awk -F: '{

print $2 | "sort -un"}'

}

echo '===== CPU Topology Table ====='

echo

echo '+--------------+---------+-----------+'

echo '| Processor ID | Core ID | Socket ID |'

echo '+--------------+---------+-----------+'

while read line; do

if [ -z "$line" ]; then

printf '| %-12s | %-7s | %-9s |\n' $p_id $c_id $s_id

echo '+--------------+---------+-----------+'

continue

fi

if echo "$line" | grep -q "^processor"; then

p_id=`echo "$line" | awk -F: '{print $2}' | tr -d ' '`

fi

if echo "$line" | grep -q "^core id"; then

c_id=`echo "$line" | awk -F: '{print $2}' | tr -d ' '`

fi

if echo "$line" | grep -q "^physical id"; then

s_id=`echo "$line" | awk -F: '{print $2}' | tr -d ' '`

fi

done < /proc/cpuinfo

echo

awk -F: '{

if ($1 ~ /processor/) {

gsub(/ /,"",$2);

p_id=$2;

} else if ($1 ~ /physical id/){

gsub(/ /,"",$2);

s_id=$2;

arr[s_id]=arr[s_id] " " p_id

}

}

END{

for (i in arr)

printf "Socket %s:%s\n", i, arr[i];

}' /proc/cpuinfo

echo

echo '===== CPU Info Summary ====='

echo

nr_processor=`get_nr_processor`

echo "Logical processors: $nr_processor"

nr_socket=`get_nr_socket`

echo "Physical socket: $nr_socket"

nr_siblings=`get_nr_siblings`

echo "Siblings in one socket: $nr_siblings"

nr_cores=`get_nr_cores_of_socket`

echo "Cores in one socket: $nr_cores"

let nr_cores*=nr_socket

echo "Cores in total: $nr_cores"

if [ "$nr_cores" = "$nr_processor" ]; then

echo "Hyper-Threading: off"

else

echo "Hyper-Threading: on"

fi

echo

echo '===== END ====='
